These are ongoing notes and are subject to change. Last updated: 2017/01/13

1. Introduction

Optimization is inherently tied to machine learning because “learning” essentially means minimizing an objective function associated with the problem of interest. In fact, the resurgence of neural networks stems from various inventions that make neural network optimization easier, faster, and better (in terms of generalization): Dropout, ReLU activation, Batch Normalization, and LSTM (for RNNs, to avoid exploding/vanishing gradients), to name a few.

One of the fundamental questions about optimization in deep neural networks is the following: why is finding local minima enough? Since it is virtually impossible to find analytical solutions to high-dimensional non-convex optimization problems, we have to rely on iterative methods, which do not necessarily guarantee good solutions. The dominant optimization algorithms in deep learning as of January 2017 are all variants of stochastic gradient descent (SGD), such as Adam, AdaGrad, and RMSProp. However, since SGD uses only local information to update the current estimates, the algorithm is likely to get stuck around local minima. In practice, however, the local minima found by SGD-type algorithms with appropriate hyperparameter tuning are good enough to achieve impressive results on many real tasks.

It is hypothesized that local minima are good enough because there are no “bad” local minima. That is, all local minima are very close to global minima on the error surface of a deep neural network. For deep linear models, a recent paper shows that all local minima are global minima under some assumptions (which are not satisfied by models used in real tasks).

For deep neural networks, this hypothesis is partially backed up by Dauphin’s paper. Recent progress in statistical physics and random matrix theory shows that the error surfaces of random Gaussian fields have an interesting structure: most local minima are close to global minima. Based on these results, Dauphin hypothesized that the error surface of a neural network follows a similar structure when the number of parameters is huge. This suggests that the answer might lie in the scale of the neural network.

Dauphin’s arguments with regard to saddle points

Dauphin’s argument behind his proposed algorithm goes like this: “The reason why many algorithms seem to get stuck during training is because of saddle points surrounded by high error flat regions, which looks as if the algorithm is stuck at high error local minima. So we developed an algorithm that escapes from these high error flat regions.”
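To make the saddle-point picture concrete, here is a minimal sketch on a toy function of my own choosing, f(x, y) = x² + y⁴/4 − y²/2 (it is not taken from Dauphin's paper). The function has a saddle at the origin and two minima at (0, ±1). Plain gradient descent started exactly on the x-axis ends up at the saddle, where the gradient vanishes, while a tiny perturbation in y lets it escape along the negative-curvature direction.

```python
def f(x, y):
    # Toy surface (an assumption for illustration): saddle at (0, 0), minima at (0, +/-1).
    return x**2 + y**4 / 4 - y**2 / 2

def grad(x, y):
    # Analytic gradient of f.
    return 2 * x, y**3 - y

def gradient_descent(x, y, lr=0.1, steps=500):
    for _ in range(steps):
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy
    return x, y

stuck = gradient_descent(1.0, 0.0)      # starts exactly on the x-axis
escaped = gradient_descent(1.0, 1e-6)   # same start with a tiny nudge in y

print("start on x-axis:  ", stuck, "f =", f(*stuck))
print("tiny perturbation:", escaped, "f =", f(*escaped))
```

In this sketch the first run stalls at a higher loss than the second, which is what a "stuck" training curve would look like from the outside.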

I wasn’t sure how reliable the first part of their statement is, namely that optimization of deep neural networks gets stuck in high-error regions. It might come from views based on past research (~2011), when we didn’t have effective tools like BN and ReLU. (Indeed, their experiments only deal with deep auto-encoders.)

I think we now have an updated view of neural network optimization. In fact, Goodfellow & Vinyals (2015) show that typical deep networks are easy to optimize and can achieve near-zero loss on the training set. (What’s hard is finding solutions with good generalization error, which will be discussed in Section 3.)

Although Dauphin’s claim about saddle points might not exactly hold for deep neural networks, the saddle point hypothesis was certainly a driving force behind many interesting non-convex optimization papers that recently appeared outside of the deep learning community. One of the impressive results is this paper by Rong Ge, which answers the local vs. global minima question in the context of Matrix Completion. The same author also shows that SGD converges to local minima in polynomial time for Tensor Factorization. Other approaches are taken by John Wright, Michael Jordan, and others, but I haven’t followed their papers too closely.

2. Degenerate Hessian

Most of the results behind “local minima are global minima”-type arguments assume that the loss function does not have a degenerate Hessian. A degenerate Hessian is one that has at least one eigenvalue equal to 0. In deep neural networks, we have degenerate Hessians everywhere, which is why we can’t simply apply the results from the above works to deep neural networks.
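As a small illustration of how easily a degenerate Hessian arises, consider the toy loss L(a, b) = (a·b − 1)², which can be read as a two-layer scalar linear "network" fit to a single target. This loss is my own example, not one from the cited works. Every point with a·b = 1 is a global minimum, and the sketch below checks that the Hessian at several such points has a zero eigenvalue.

```python
import math

def hessian(a, b):
    """Analytic Hessian of the toy loss L(a, b) = (a*b - 1)**2."""
    return [[2 * b**2, 4 * a * b - 2],
            [4 * a * b - 2, 2 * a**2]]

def eigvals_sym2(h):
    """Eigenvalues of a symmetric 2x2 matrix, smallest first."""
    p, q, r = h[0][0], h[0][1], h[1][1]
    mean = (p + r) / 2
    dev = math.hypot((p - r) / 2, q)
    return mean - dev, mean + dev

# Points on the curve a * b = 1 are all global minima of L.
spectra = {}
for a in (0.5, 1.0, 2.0):
    b = 1.0 / a
    spectra[(a, b)] = eigvals_sym2(hessian(a, b))
    print((a, b), spectra[(a, b)])
```

The zero eigenvalue corresponds to moving along the curve of minima: rescaling a up and b down leaves the loss unchanged, so there is no curvature in that direction.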

(Side note: this is also why deep learning is hard to analyze using classical statistics and the decision theory framework, which rely heavily on the Hessian being non-singular. Non-singularity ensures asymptotic normality of the posterior distribution.)

A recent paper from Facebook discusses the consequences of degenerate Hessians specifically in deep learning, so I’ll summarize the main points below:

- The eigenvalue spectrum is composed of two parts: the bulk around 0 and the edges. It is hypothesized that the former reflects the over-parametrization of the model and the latter reflects the complexity of the input data.

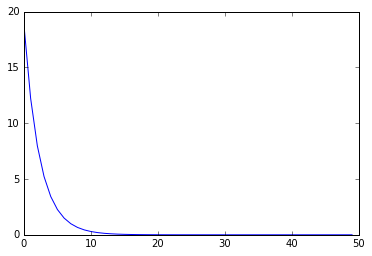
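The bulk/edge split can be seen in a much simpler setting than a deep network. The sketch below is an assumption-laden toy, not the experiment from the paper: it uses over-parametrized linear least squares with 50 parameters and 10 random data points, where the Hessian is XᵀX / n. The number of zero eigenvalues is fixed by the excess of parameters over data points, while the nonzero eigenvalues move when the data are rescaled.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_params = 10, 50            # more parameters than data points
X = rng.normal(size=(n_samples, n_params))

def hessian_spectrum(X):
    """Eigenvalues of the Hessian of the loss 0.5 * mean((X @ w - y)**2)."""
    H = X.T @ X / X.shape[0]            # independent of w and y for this loss
    return np.linalg.eigvalsh(H)        # ascending order

eig = hessian_spectrum(X)
eig_scaled = hessian_spectrum(3.0 * X)  # same model, rescaled inputs

n_zero = int(np.sum(np.abs(eig) < 1e-10))
print("eigenvalues near zero:", n_zero, "of", n_params)
print("largest eigenvalue:", eig[-1], "-> after rescaling the data:", eig_scaled[-1])
```

Whether the same mechanism explains the spectrum of real deep networks is exactly the hypothesis stated above; this toy only shows that the two parts can have different origins.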

The recent paper by Chaudhari gives more details on the scale of the values at the edges of the eigenvalue spectrum: positive eigenvalues have a long tail, while negative eigenvalues decay much faster.

According to Chaudhari, this trend is ubiquitous across a variety of network architectures, sizes, datasets, or optimization algorithms, which suggests that “local minima that generalize well and are discovered by gradient descent lie in “wide valleys” of energy landscape”, which are characterized by degenerate Hessians.
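One rough way to see the difference between a wide valley and a sharp one is to compare how much the loss rises under small parameter perturbations. The sketch below uses a hypothetical one-dimensional loss with two minima of equal depth, one narrow and one wide. The loss, the curvature constants, and the perturbation radius are all my own assumptions for illustration.

```python
def loss(w, k_sharp=100.0, k_flat=1.0):
    """Two minima with the same loss value 0: a narrow one at w = -1, a wide one at w = +1."""
    return min(0.5 * k_sharp * (w + 1.0) ** 2, 0.5 * k_flat * (w - 1.0) ** 2)

def perturbed_loss(w, radius=0.1, n_points=21):
    """Average loss over evenly spaced perturbations of w in [-radius, radius]."""
    offsets = [radius * (2 * i / (n_points - 1) - 1) for i in range(n_points)]
    return sum(loss(w + d) for d in offsets) / n_points

for name, w in (("sharp", -1.0), ("wide", 1.0)):
    print(name, "loss:", loss(w), "perturbed loss:", perturbed_loss(w))
```

Both minima look identical if only the loss value is inspected, but the averaged loss around the narrow minimum is much larger, which is one intuition for why wide valleys might be more robust to the shift between training and test data.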

One important thing to remember is that our goal is not to find the global minima of the objective function of interest, but to find good enough minima that generalize well. We don’t want to overfit our model to the training data.

Motivated by the observation in the above section, Chaudhari proposed Entropy-SGD, which actively seeks flat regions (with low error), as opposed to Dauphin’s algorithm, which intentionally escapes from saddle points.

[Details of Entropy-SGD will follow.]

This paper provides a unified framework that generalizes Batch Normalization and other methods (path-normalized approach).

Is there a theoretical argument for the generalization power of Batch Normalization? I often hear phrases like: “If you use BN, you don’t need to use Dropout.” This seems to be problem-dependent, at least in my experience. Can we say something like: Batch Normalization allows the network to converge to flat regions with low error (and thus high generalization performance)?

4. 1st order vs. 2nd order

If we could implement Natural Gradient Descent in a large-scale setting, it would be ideal because of its good generalization performance and invariance properties.

(For details, see this paper.)

The issue is that we can’t really work with the Natural Gradient because computing the full Fisher information matrix is prohibitively expensive. At present, optimization algorithms based on approximations of the Fisher information matrix do not seem to work well enough to be competitive with Adam or tuned SGD, which is why 2nd-order methods are an active research area. Essentially, the matrix that multiplies the gradient vector in the update equation of a 2nd-order method can be considered an approximation to the inverse of the Fisher information matrix, so the goal of 2nd-order methods research is (in a way) to come up with a computationally feasible way to approximate it.
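For intuition about what the natural gradient buys us, here is a minimal sketch on a model where the Fisher information matrix is known in closed form: fitting a one-dimensional Gaussian N(mu, sigma²) by maximum likelihood. The model, the synthetic data, the starting point, and the step size are all my own choices for illustration. In a deep network the Fisher matrix has no such closed form and has one row per parameter, which is exactly the difficulty described above.

```python
import math
import random

rng = random.Random(0)
data = [rng.gauss(100.0, 50.0) for _ in range(1000)]   # synthetic data (assumption)
mean = sum(data) / len(data)
std = math.sqrt(sum((x - mean) ** 2 for x in data) / len(data))  # maximum-likelihood estimate

def nll_grad(mu, sigma):
    """Gradient of the average negative log-likelihood of N(mu, sigma^2) w.r.t. (mu, sigma)."""
    s = sum((x - mu) ** 2 for x in data) / len(data)
    return -(mean - mu) / sigma**2, 1.0 / sigma - s / sigma**3

def fit(natural, mu=0.0, sigma=10.0, lr=0.1, steps=200):
    for _ in range(steps):
        g_mu, g_sigma = nll_grad(mu, sigma)
        if natural:
            # The Fisher matrix of N(mu, sigma^2) in (mu, sigma) coordinates is
            # diag(1 / sigma^2, 2 / sigma^2); multiply the gradient by its inverse.
            g_mu, g_sigma = sigma**2 * g_mu, sigma**2 / 2.0 * g_sigma
        mu, sigma = mu - lr * g_mu, sigma - lr * g_sigma
    return mu, sigma

print("maximum likelihood:", (mean, std))
print("plain gradient:    ", fit(natural=False))
print("natural gradient:  ", fit(natural=True))
```

With the same step size and number of steps, the natural-gradient run should land close to the maximum-likelihood estimate while the plain-gradient run is still far away, because the plain gradient shrinks with the scale of the data and the natural gradient does not.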

Martens and Pascanu have many interesting works on 2nd-order methods in deep neural network optimization. If you are interested in 2nd-order methods, I highly recommend reading James Martens’s PhD thesis; it is a very good introduction to and overview of the field.

Below, I’ll list some of the important papers:

I’ll cite some of the comments from the following Reddit thread on why L-BFGS is not used very often in deep learning:

https://www.reddit.com/r/MachineLearning/comments/4bys6n/lbfgs_and_neural_nets/

“Back in 2011 when that paper was published, deep learning honestly didn’t work all that well on many real tasks. One of the hypotheses at the time (which has since been shown to be false) is the optimization problem that neural nets posed was simply too hard – neural nets are non-convex, and we didn’t have much good theory at the time to show that learning with them was possible.

That’s one of the reasons why people started exploring different optimization algorithms for neural nets, which was a trend that continued roughly until the breakthrough results in 2012, which worked remarkably well despite only using SGD + momentum. Since then, more theory has been developed supporting this, and other tricks have been developed (BatchNorm, RMSProp/Adagrad/Adam/Adadelta) that make learning easier.”

Future Directions: Optimization for Two-Player game

I think plateau-finding algorithms will keep appearing in 2017. How to characterize plateaus efficiently will be key. As for 2nd-order methods, the main challenge is how to construct a non-diagonal scaling (to be applied to the gradient vector) with low per-iteration cost. (Note to myself: can we use an idea from the Hessian-sketch paper to construct something like a Fisher-sketch, an approximation to the (invertible) Fisher information matrix?)

So far, we have only talked about optimizing a single objective function.

The Generative Adversarial Network (GAN) is a relatively new generative model that uses a two-player learning framework for high-dimensional density estimation.

Although it already produces impressive results in generating images, there are several issues. One such problem is that training GANs is notoriously hard due to the two-player nature: an update that optimizes one function is not necessarily an optimal update for the other function. Effective training schemes for GANs are certainly an open area for future research.
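The difficulty already shows up in the simplest two-player problem. The sketch below is not a GAN; it is the toy bilinear game f(x, y) = x·y, chosen by me for illustration, where one player minimizes over x and the other maximizes over y. The unique equilibrium is (0, 0), yet when both players take a gradient step at the same time the iterates spiral away from it.

```python
import math

def simultaneous_gda(x, y, lr=0.1, steps=100):
    """Player 1 minimizes f(x, y) = x * y over x; player 2 maximizes it over y."""
    for _ in range(steps):
        gx, gy = y, x                      # df/dx = y, df/dy = x
        x, y = x - lr * gx, y + lr * gy    # both players update at the same time
    return x, y

start = (1.0, 1.0)
end = simultaneous_gda(*start)
print("distance from equilibrium (0, 0):", math.hypot(*start), "->", math.hypot(*end))
```

Each individual step is a sensible gradient step for the player taking it, but the combined update multiplies the distance to the equilibrium by sqrt(1 + lr²) every iteration, so the pair never settles.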

[Things to add: # Small minibatches are better for generalization]

[Should I write a separate post for each algorithm/paper that appears in this post and just link to each, so that this post can be more concise and easier to maintain?]